👨💻 The AI Engineer is Mainstream

Build dope apps. Share them with others. Repeat.

Andrew Ng shared some great advice yesterday in The Batch about how to be a great AI Engineer (emphasis my own).

There’s a new breed of GenAI Application Engineers who can build more-powerful applications faster than was possible before, thanks to generative AI. Individuals who can play this role are highly sought-after by businesses, but the job description is still coming into focus. Let me describe their key skills, as well as the sorts of interview questions I use to identify them.

Skilled GenAI Application Engineers meet two primary criteria: (i) They are able to use the new AI building blocks to quickly build powerful applications. (ii) They are able to use AI assistance to carry out rapid engineering, building software systems in dramatically less time than was possible before. In addition, good product/design instincts are a significant bonus.

~ A. Ng

This “new breed of GenAI Application Engineers” is simply the AI Engineer of 2025.

We may have called this person the “unicorn AI Engineer” in 2024, or the “unicorn data scientist/machine learning engineer” in 2023. But with the rise of AI assistance in coding (e.g., Cursor/Windsurf/etc., Codex/Devin/Manus/etc.), in product management (e.g., ChatGPT/Claude, etc.), and in design (e.g., Vibe Coding), “unicorn” is probably a bit extreme here.

More like table stakes.

That is, you should be able to build, understand what you’re building and why, and present it in a way that’s visually appealing to customers and stakeholders.

Then you can command (and indeed, demand) value from the market 📈.

Building 🏗, Shipping 🚢, and Sharing 🚀

When interviewing GenAI Application Engineers, I will usually ask about their mastery of AI building blocks and ability to use AI-assisted coding, and sometimes also their product/design instincts. One additional question I've found highly predictive of their skill is, “How do you keep up with the latest developments in AI?” Because AI is evolving so rapidly, someone with good strategies for keeping up — such as reading The Batch and taking short courses, regular hands-on practice building projects, and having a community to talk to — really does stay ahead of the game.

~ A. Ng

This is exactly right.

Read newsletters (pick your favorite; they’re all the same over the course of a month).

<Aside>—

Here are the AI ones that I read (no affiliation):

Of course, you should also read our newsletter, The LLM Edge, which will keep your building, shipping, and sharing tank topped off!

-<Aside>

Build stuff that interests you.

Ship it, and make it beautiful.

Share what you read and what you build with others.

In short, the answer to “how do I keep up with the latest developments in AI” is to build 🏗, ship 🚢, and share 🚀 awesome LLM applications as part of a community.

That’s also probably the best answer you can give if Andrew asks you in an interview:

How do you keep up with the latest developments in AI?

Community is the Answer

For 10 years as a university professor, I saw that the only students succeeding in the 21st-century workforce were those who were learning new things, using what they learned to build awesome stuff, and sharing the awesome stuff they built with others.

It wasn’t degrees, prestige, or pedigree; it was making stuff as part of a community that was helping people achieve their dreams.

In 2023, after working for FourthBrain, one of Andrew Ng’s AI Fund portfolio companies, it became clear to me that the solution to the problem of keeping up to date was community.

Of course, you need to be learning and building, but doing this in a vacuum always ended with people (myself included!) putting together elaborate roadmaps that they might finish working through “one day!”

However, by the time you finish all of those online asynchronous courses, you’ve passed a lot of quizzes and earned a lot of certificates, but did you get that job? Are you making that money? Are you demanding that salary? Do you have the actual skills that the market is demanding and can’t get enough of?

In fact, are the skills and techniques you just learned even still relevant?

And this, my friends, is the kicker. If it takes you six months to learn enough to be dangerous, that’s simply too long if your goal is to start creating value in the real world.

It was with this insight (that a structured, practical, constantly updated curriculum combined with a supportive community of legends was the answer) that Wiz and I started AI Makerspace, a place where we could align the curriculum exactly with what is needed in practice: today, in production, and in the enterprise. We could also start building a real community where nerds like us could come together to build dope apps they were excited to share with others.

Today, AI Makerspace is on a mission to create the world’s leading community for people who want to build 🏗, ship 🚢, and share 🚀 production LLM applications.

People are still building dope apps, like the folks from Cohort 6 that presented last week for Demo Day!

Equally important is that they’re doing it all as part of a community, one that gives them the courage not just to build, and not just to ship, but also to share.

I’m proud to say that we have created a community where people step into the mystical, unicorn GenAI Application Engineer role. In fact, this happens all the time now. The stories we’ve heard are amazing, and I look forward to a rich future of many more!

In other words, we’re minting mainstream AI Engineers.

How?

Let’s talk a bit more about that structured curriculum.

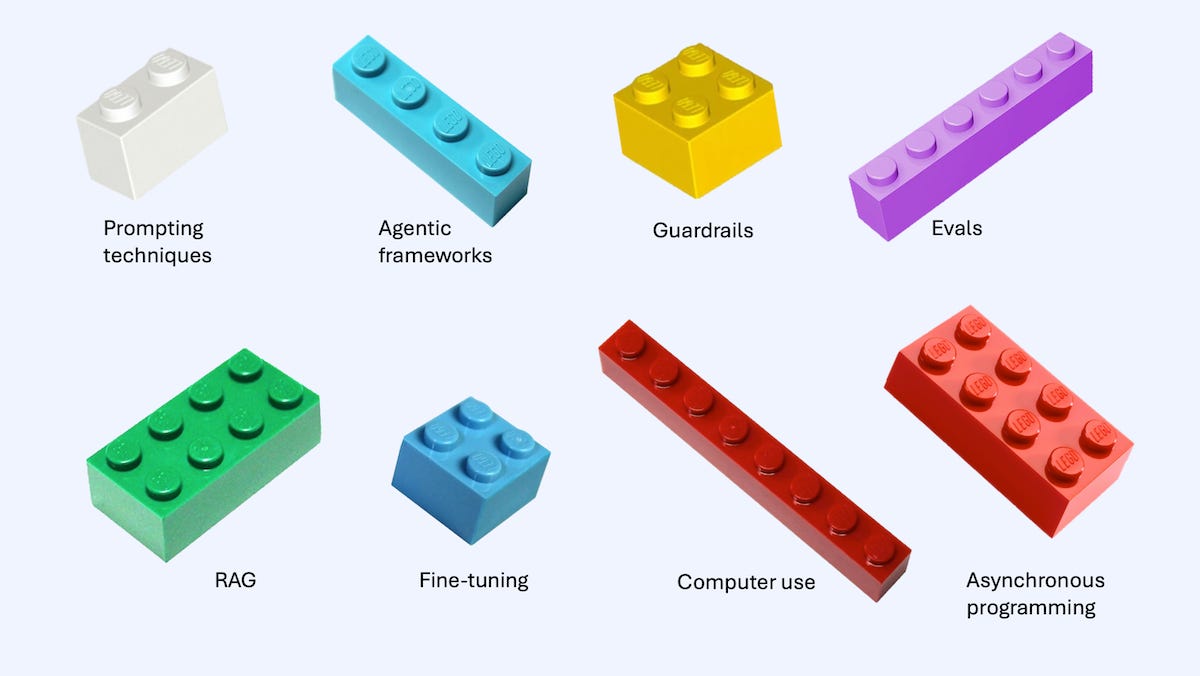

🧱 The Lego Blocks of AI Engineering

The Wiz and I have long harped on lego blocks, and on focusing “outside of the API” when it comes to AI Engineering.

We like to define AIE as:

AI Engineering

The industry-relevant skills that data science and engineering teams need to successfully build, deploy, operate, and improve Large Language Model (LLM) applications in production environments

So how do we get there?

The first pieces are simple:

Yes, this involves Cursor, Vibe Coding, and hitting the OpenAI API. We call this The AI Engineer Challenge, and we require that all students who wish to enroll in our bootcamp complete this challenge first.

<Aside>—

As of this week it turns out that AI can actually DO The AI Engineer Challenge! Check out ChatGPT Codex in action 👇

-<Aside>

Let’s take a look at what Andrew recommends:

AI building blocks. If you own a lot of copies of only a single type of Lego brick, you might be able to build some basic structures. But if you own many types of bricks, you can combine them rapidly to form complex, functional structures. Software frameworks, SDKs, and other such tools are like that. If all you know is how to call a large language model (LLM) API, that's a great start. But if you have a broad range of building block types — such as prompting techniques, agentic frameworks, evals, guardrails, RAG, voice stack, async programming, data extraction, embeddings/vectorDBs, model fine tuning, graphDB usage with LLMs, agentic browser/computer use, MCP, reasoning models, and so on — then you can create much richer combinations of building blocks.

~ A. Ng

We agree that you should learn how to call LLMs like a developer through the OpenAI API, or an OpenAI API-compatible one. It’s part of our initial challenge!
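If you haven't made that first call yet, here is a minimal sketch of what an OpenAI-style chat completion request contains, written with only Python's standard library so it runs without an SDK. It assumes the `POST {base_url}/chat/completions` endpoint with a JSON body of `model` and `messages`; the helper names `build_chat_request` and `send` and the model name `gpt-4o-mini` are illustrative assumptions, not part of the challenge. Pointing `base_url` at another provider that accepts the same request schema is, roughly, what "OpenAI API-compatible" means.

```python
import json
import os
import urllib.request
from typing import Optional


def build_chat_request(
    user_prompt: str,
    system_prompt: str = "You are a helpful assistant.",
    model: str = "gpt-4o-mini",  # illustrative; use whatever model your provider serves
    base_url: str = "https://api.openai.com/v1",
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request in the chat-completions format."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {key}"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the reply text (needs network access and a valid key)."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Explain RAG in one sentence.")
    print(req.full_url)          # https://api.openai.com/v1/chat/completions
    print(json.loads(req.data))  # the JSON payload that would be sent
    # print(send(req))           # uncomment once OPENAI_API_KEY is set
```

In day-to-day work most people use the official `openai` Python SDK, which wraps this same request; the raw version just makes the shape of the call visible.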

The rest of this list, however, feels chaotic and disorganized. I disagree that you should learn “prompting techniques” next.

In fact, a key insight from teaching many hundreds of students at AI Makerspace over the past 2 years is that learning to build apps that require prompting beats learning about prompting in the abstract. When you tell someone to care about prompting, they won’t. When you give them very specific instructions including input, output, and evaluation specifications, they’re forced to confront not only that you’ve just prompted them to create the app, but also that the quality of their prompting really affects their outputs and evals! “Aha!” learning moments ensue.

Tell people to care about prompting —> dead breakout rooms.

Tell people to “vibe check” their “AI Engineer Challenge” —> they tell us how they learned that prompting really matters!
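A "vibe check" can start as nothing more than a few hand-written inputs, each paired with a quick pass/fail check on the output. The sketch below is hypothetical throughout: `fake_app` stands in for a real LLM call so it runs offline, and the cases and checks are made up for illustration. Writing the cases down is the part that forces you to specify inputs, outputs, and what "good" looks like, which is where the prompting lessons tend to show up.

```python
from typing import Callable, List, Tuple


def fake_app(user_input: str) -> str:
    """Hypothetical stand-in for an LLM-backed summarizer so the sketch runs offline."""
    if "summarize" in user_input.lower():
        return "Summary: LLM apps need evals."
    return "I can only help with summaries."


# Each case pairs an input with a simple, human-readable check on the output.
VIBE_CHECKS: List[Tuple[str, Callable[[str], bool]]] = [
    ("Summarize: LLM apps need evals.", lambda out: out.startswith("Summary:")),
    ("Summarize this in under 10 words.", lambda out: len(out.split()) <= 10),
    ("What's the weather?", lambda out: "only help" in out),  # should politely refuse
]


def run_vibe_check(app: Callable[[str], str]) -> List[Tuple[str, bool]]:
    """Run every case through the app and record pass/fail per input."""
    results = []
    for user_input, check in VIBE_CHECKS:
        output = app(user_input)
        results.append((user_input, check(output)))
    return results


if __name__ == "__main__":
    for user_input, passed in run_vibe_check(fake_app):
        print(f"{'PASS' if passed else 'FAIL'}: {user_input}")
```

When a case fails against the real app, the usual fix is in the prompt, which is exactly the "Aha!" moment described above.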

I also disagree that you should learn “agentic frameworks,” plural. In fact, we spend the vast majority of our time in our courses teaching only one framework (LangGraph/LangChain). That’s because if you can build like a legend with one framework, the agentic framework problem becomes trivial. That is, you could pick up others if you needed to, but unless you need to, why would you?

Of course, we tried teaching two, and it was more confusing than useful.

Teach two —> “why this one instead of that one?”

Teach one in depth and challenge them to build in another one —> they tell us how they learned that the agent framework doesn’t really matter!
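Part of why the framework matters less than it seems: underneath the abstractions, a basic tool-using agent is a loop. Ask the model what to do next, run the tool it picks, feed the result back, and stop when it gives a final answer. The framework-free sketch below uses a hypothetical `scripted_model` in place of a real LLM and two toy tools; it illustrates the pattern and is not how LangGraph or any other framework implements it.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy tools the agent can call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
    "upper": lambda arg: arg.upper(),
}


def scripted_model(history: List[str]) -> Tuple[str, str]:
    """Hypothetical stand-in for an LLM that returns (action, argument).

    A real model would reason over the history; this one calls 'add' once, then answers.
    """
    observations = [h for h in history if h.startswith("observation:")]
    if not observations:
        return ("add", "2+3")
    return ("final", f"The answer is {observations[-1].split(':', 1)[1].strip()}")


def run_agent(
    task: str,
    model: Callable[[List[str]], Tuple[str, str]],
    max_steps: int = 5,
) -> str:
    """Minimal agent loop: decide, act, observe, repeat until a final answer or the step limit."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action, arg = model(history)
        if action == "final":
            return arg
        if action not in TOOLS:
            history.append(f"observation: unknown tool {action}")
            continue
        history.append(f"observation: {TOOLS[action](arg)}")
    return "Stopped: step limit reached."


if __name__ == "__main__":
    print(run_agent("What is 2+3?", scripted_model))  # The answer is 5
```

Frameworks add structure, state handling, and tooling around this core; once the loop is familiar, moving between frameworks is mostly a matter of learning new names for the same parts.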

I could go on here to say that if RAG were second in the list, then it might resemble the way we talk about the core patterns of prototyping AI applications; e.g.,

Prompt Engineering

Retrieval-Augmented Generation

Agents

Fine-Tuning

There are many other such quibbles that I might have about this list, but the core takeaway is that it’s a smattering of buzzwords, not a roadmap. It’s random lego blocks on the floor, some of which are connected today but might be more or less connected tomorrow; ultimately, it’s a pile of blocks.

Getting too hung up on individual blocks can really take your eye off the ball sometimes. Here are a few examples of ambiguity that is unlikely to resolve itself anytime soon.

Take for instance the emergence of Small Language Models (SLMs). How big is “small?”

Or perhaps consider the emergence of Large Reasoning Models (LRMs). How much reasoning defines the difference between LLM and LRM, and on what benchmark?

Consider that alignment is simply finer fine-tuning, and that the industry has started to lump all of this together into “post-training” while also including techniques like merging and distillation.

These days, reinforcement learning is being applied not just to alignment, but as Reinforcement Fine Tuning and Reinforcement Pre-Training!

We did our damnedest to articulate what’s really going on in our fully open-sourced course, LLM Engineering, which we define (and caveat) as follows:

LLM Engineering

Large Language Model Engineering (LLM Engineering) refers to the emerging best-practices and tools for pretraining, post-training, and optimizing LLMs prior to production deployment.

Pre- and post-training techniques include unsupervised pretraining, supervised fine-tuning, alignment, model merging, distillation, quantization, and others.

*LLM Engineering today is done with the GPT-style transformer architecture.

**Small Language Models (SLMs) of today can be as large as 70B parameters.

So what’s the point?

Instead of learning every block you hear about, pay attention (just as the OG paper taught us) to the lego blocks that the community is attending to over time.

🔀 The Intersection of Building and Community

There is one area where I strongly agree with Andrew Ng, and it’s when he says this:

The number of powerful AI building blocks continues to grow rapidly. But as open-source contributors and businesses make more building blocks available, staying on top of what is available helps you keep on expanding what you can build. Even though new building blocks are created, many building blocks from 1 to 2 years ago (such as eval techniques or frameworks for using vectorDBs) are still very relevant today.

~ A. Ng

In the age of mainstream AI Engineers, mature lego blocks rule.

They are the ones you should learn first.

Yes, there are sea changes from time to time, but the way we build simple prompted applications or simple RAG applications, and then ship them to production, has remained very much the same over the past few years.

Yes, agents are still being figured out, including foundational definitions (see What is an Agent), and MCP is all the rage (A2A didn’t quite hit the same resonance!).

However, to focus on the bleeding edge of the hype is to miss the forest for the trees.

Just as we can study LLM Foundations, we can study AI Engineering foundations today, and we should, one key pattern and lego block at a time.

Like this:

Set up your Dev Environment

Build your first app, prompted

Evaluate your first app, with a prompt!

Deploy that app with a vibe-coded front end, E2E

Build your second app, this time with retrieval (RAG)

Evaluate your RAG app, considering how to adapt prompts to assess retrieval (hint: there are existing frameworks for this!)

Can you deploy your RAG app E2E? Does the UI or UX need to change?

Build your third app, this time with agentic reasoning

Evaluate your agent app…

Deploy your agent app…
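For the second app, the core of retrieval fits in a few lines. The dependency-free sketch below ranks a small set of hypothetical documents against a question by cosine similarity and places the best match into the prompt. Word-count vectors stand in for a real embedding model and a Python list stands in for a vector database; both are simplifying assumptions, as are the document texts, the helper names, and the prompt template.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical knowledge base; a real app would store chunked documents in a vector DB.
DOCS = [
    "RAG retrieves relevant context and adds it to the prompt before generation.",
    "Agents use an LLM to decide which tool to call next.",
    "Fine-tuning updates model weights on task-specific data.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts, standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> List[Tuple[float, str]]:
    """Return the k (score, document) pairs most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in DOCS]
    return sorted(scored, reverse=True)[:k]


def build_rag_prompt(query: str, k: int = 1) -> str:
    """Assemble a prompt that asks the model to answer from the retrieved context only."""
    context = "\n".join(doc for _, doc in retrieve(query, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_rag_prompt("How do agents decide which tool to call?"))
```

Evaluating the RAG app then means checking both halves separately: did `retrieve` return the right context, and did the generated answer stay grounded in it?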

From here, you may have noticed that we’ve already arrived in bonus territory, because we’ve been building end-to-end; that is, with front-end user interfaces.

Bonus: Product skills. In some companies, engineers are expected to take pixel-perfect drawings of a product, specified in great detail, and write code to implement it. But if a product manager has to specify even the smallest detail, this slows down the team. The shortage of AI product managers exacerbates this problem. I see teams move much faster if GenAI Engineers also have some user empathy as well as basic skill at designing products, so that, given only high-level guidance on what to build (“a user interface that lets users see their profiles and change their passwords”), they can make a lot of decisions themselves and build at least a prototype to iterate from.

~ A. Ng

I don’t need guardrails, computer use agents, or autonomous software engineers to make it here. I don’t need MCP servers, more than one agent framework, or to master LeetCode problems or the transformer (though all those things are great blocks for building more complex things!).

But I do need to set up my system to build stuff the way I would if I had an actual AI Engineer job.

And that’s the point!

The reason that “many building blocks from 1 to 2 years ago (such as eval techniques or frameworks for using vectorDBs) are still very relevant today” is that they have created real business value. They have proven themselves to be truly practical AI Engineering tools. They are the tools that the global AI Engineering community has iterated on and used to great effect over the past few years. They are the tools that have gotten “regular hands-on practice building projects” and that have had “a community to talk to” and iterate on them.

In general, learn these tools first.

What are they?

Great question!

Our best shot at it is updated every cohort. For June 2025 (Cohort 7), you can find all of the tools and techniques outlined here.

But it depends: how much time do you have?

Do you only have 15 hours? Start here.

Do you have 6 weeks? Consider spending them like this:

Week 1: The AI Engineer Challenge & Vibe Check (e.g., Build and Evaluate Your First LLM Application)

Week 2: Build and Evaluate Your First RAG Application

Week 3: Build and Evaluate Your First Agent Application

You can find plenty of materials to help you get started in the Awesome-AIM-Index for free, and you can find us live on YouTube every week covering the latest! Of course, the DLAI short courses are often quite well done too.

From there, let your curiosity call you to the next lego block.

Or maybe let your community do it (join us here!).

Either way … until next time, keep building 🏗️, shipping 🚢, and sharing 🚀, and we’ll do the same.

Stay on the path.

Cheers,

Dr. Greg